Overview: Andy Jassy, AWS, and the new Amazon AI chip

Amazon CEO Andy Jassy announced that Amazon’s custom AI chip, built to compete with Nvidia GPUs, has already produced multibillion-dollar revenue. The announcement, made on December 3, 2025, underscores AWS’s push into custom silicon for cloud AI workloads.

The story has three parts: the people, the chip, and the scale of the business. Andy Jassy is Amazon’s CEO and previously led AWS. Nvidia supplies the dominant GPU designs for training and inference today. AWS’s chip aims to reduce costs, tune performance for certain workloads, and give Amazon more control over its AI infrastructure.

What AWS said and why it matters

Jassy’s comment signals commercial traction for Amazon’s in-house hardware. Multibillion-dollar revenue suggests that customers are running real AI workloads on the new chip at meaningful scale. For ordinary readers, it is a sign that a major cloud provider is moving beyond experiments and into production deployment.

The key facts are simple. AWS is investing in custom silicon to change its cost structure and product options. Nvidia remains a leading supplier of GPUs for many AI tasks, but AWS now offers another path for customers who use Amazon cloud services.

Why AWS is building custom chips

AWS has several reasons to design its own AI processors. Each reason affects cost, performance, and market choice.

- Cost control: Amazon can reduce its dependence on third-party chip makers and capture more of the hardware margin.

- Product differentiation: custom chips let AWS offer features or price points that are unique to its cloud services.

- Performance tuning: silicon designed for specific neural network patterns can be more efficient for those tasks than general-purpose GPUs.

- Vendor independence: an internal option gives AWS more leverage in negotiations and supply planning.

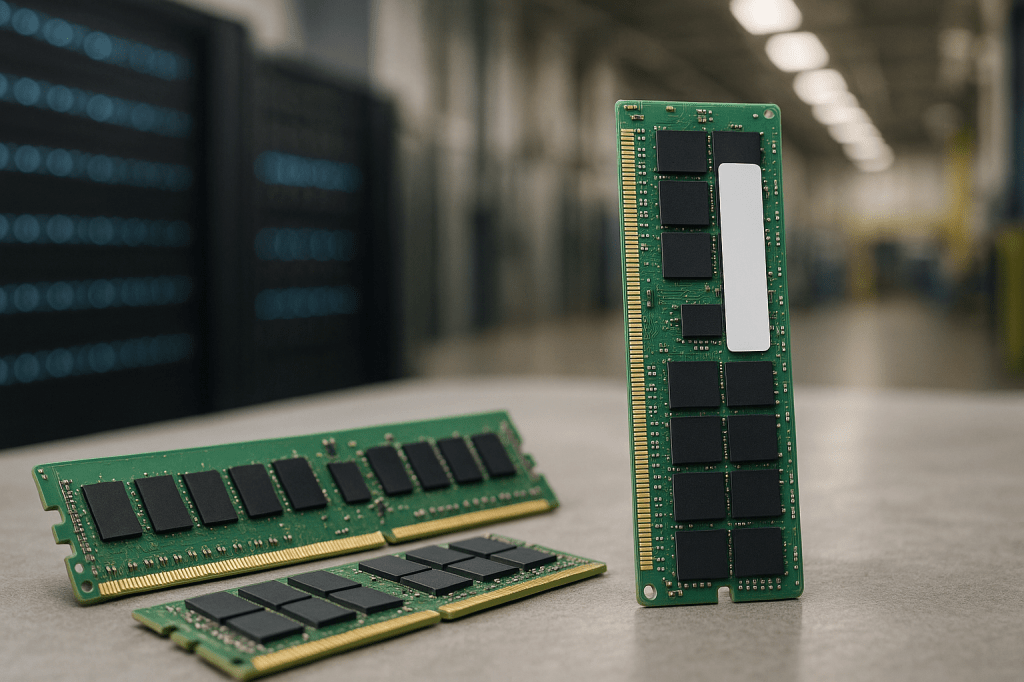

Technical context: chips for training versus inference

Understanding the difference between training and inference helps explain where AWS chips might fit.

- Training is the phase where a model learns from data. It usually needs a lot of raw compute and memory bandwidth. Nvidia GPUs are optimized for dense matrix math and have been the standard for large-scale training.

- Inference is the phase where a trained model makes predictions. It can often be optimized for latency and cost per request. Custom chips can deliver strong efficiency gains for inference workloads.

AWS’s chip competes with Nvidia in some workload segments, especially inference and certain types of optimized training. Performance varies by model type, software stack, and system configuration. Benchmarks and real-world customer results are the best guide to how chips compare in practice.
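As a rough illustration of what a repeatable benchmark involves, here is a minimal latency harness in Python. It is a sketch under stated assumptions, not an AWS or Nvidia tool: `benchmark` and `fake_inference` are hypothetical names, and the stand-in workload would need to be replaced by a real model call on the hardware being compared.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(run_once: Callable[[], object],
              warmup: int = 5,
              iterations: int = 50) -> Dict[str, float]:
    """Time repeated calls to run_once and summarize latency in milliseconds."""
    if iterations < 2:
        raise ValueError("iterations must be at least 2")

    # Warm-up calls are excluded so one-time setup cost does not skew results.
    for _ in range(warmup):
        run_once()

    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)

    samples.sort()
    # Simple nearest-rank style index for the 95th percentile.
    p95_index = min(len(samples) - 1, int(0.95 * len(samples)))
    return {
        "mean_ms": statistics.mean(samples),
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def fake_inference() -> int:
    # Stand-in workload (assumption): replace with a real model invocation.
    return sum(i * i for i in range(10_000))


if __name__ == "__main__":
    print(benchmark(fake_inference))
```

Running the same harness with the same model and inputs on each architecture gives comparable latency figures. Tail latency (p95) often matters more than the mean for user-facing inference.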

Market impact on Nvidia and the cloud ecosystem

An in-house chip business at multibillion-dollar scale is likely to influence several market dynamics.

- Pricing pressure: a major cloud provider offering alternative hardware can push down prices for AI compute.

- Vendor share: large customers may split workloads across different architectures depending on cost and performance.

- Supply chain effects: demand for Nvidia GPUs could shift for certain cloud workloads, while other suppliers may see new opportunities.

This does not mean Nvidia will lose its leading position overnight. Nvidia GPUs remain central to many large-scale AI training pipelines. The new AWS chip adds choice and may accelerate price and product innovation.

Which customers benefit most

Adoption of AWS’s chip will vary by customer type.

- Large-scale cloud users that run billions of inference requests can gain from a lower cost per operation.

- Technology startups that are sensitive to compute cost may move to AWS hardware if it reduces expenses while maintaining acceptable performance.

- Enterprise AI teams with long-running workloads can tune their deployments for price and latency on either architecture.

Migration considerations include model compatibility, retraining costs, and changes to the software stack. Customers need tooling and libraries that map existing models to the new hardware with minimal disruption.
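The trade-off between lower cost per request and one-time migration cost can be sketched with simple arithmetic. The Python below is illustrative only: the `Option` class, the function names, and every price and throughput figure are made-up assumptions, not published AWS or Nvidia numbers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Option:
    name: str
    hourly_price_usd: float     # price of one instance-hour (assumed)
    requests_per_second: float  # sustained throughput per instance (assumed)


def cost_per_million_requests(option: Option) -> float:
    """Compute cost of serving one million requests on a fully used instance."""
    requests_per_hour = option.requests_per_second * 3600
    return option.hourly_price_usd / requests_per_hour * 1_000_000


def breakeven_months(current: Option, candidate: Option,
                     monthly_requests: float,
                     migration_cost_usd: float) -> Optional[float]:
    """Months until migration cost is recovered, or None if nothing is saved."""
    saving_per_million = (cost_per_million_requests(current)
                          - cost_per_million_requests(candidate))
    if saving_per_million <= 0:
        return None
    monthly_saving = saving_per_million * monthly_requests / 1_000_000
    return migration_cost_usd / monthly_saving


if __name__ == "__main__":
    # Hypothetical figures chosen only to make the arithmetic easy to follow.
    gpu = Option("gpu-instance", hourly_price_usd=3.60, requests_per_second=1000)
    custom = Option("custom-chip-instance", hourly_price_usd=2.70,
                    requests_per_second=1000)
    months = breakeven_months(gpu, custom, monthly_requests=1_000_000_000,
                              migration_cost_usd=3000)
    print(f"Break-even after {months:.1f} months")
```

With these invented inputs, the saving is about $0.25 per million requests, so a $3,000 migration pays for itself in roughly 12 months at one billion requests per month. Real decisions would also need measured throughput on the actual model rather than assumed values.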

Ecosystem and tooling: the software side matters

Hardware is only useful if software makes it easy to use. Adoption will hinge on developer experience, libraries, and integration with popular frameworks.

- APIs and SDKs that make it simple to port models are essential for broader uptake.

- Performance-tuned libraries for common operations help customers see immediate benefits.

- Clear benchmarks and reference architectures reduce the risk for teams evaluating a move.

AWS already has extensive software and cloud management tools. Extending those tools to support its chip smoothly will be important for converting trial users into long-term customers.

Economic effects for cloud pricing and margins

Custom silicon can change how cloud providers think about margins and pricing.

- If AWS reduces its hardware costs, it can either lower prices to gain market share, or keep prices stable to increase margin.

- Customers may see specialized pricing tiers for different hardware options, with cost benefits for workloads that can use AWS chips.

- Competitive moves by other cloud providers may follow, including their own custom chips or new partnerships with GPU vendors.

These shifts will play out over multiple quarters, and the effects will vary across industries and customer sizes.
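The choice between lowering prices and widening margins can be made concrete with a small calculation. This Python sketch uses hypothetical function names and invented numbers. The `passthrough` parameter is an assumption introduced here to represent the share of a hardware cost saving that a provider hands to customers.

```python
def margin(price: float, cost: float) -> float:
    """Gross margin as a fraction of price."""
    if price <= 0:
        raise ValueError("price must be positive")
    return (price - cost) / price


def price_after_passthrough(price: float, old_cost: float,
                            new_cost: float, passthrough: float) -> float:
    """New price when a fraction of the cost saving is passed to customers.

    passthrough = 0.0 keeps the price unchanged (all savings become margin);
    passthrough = 1.0 cuts the price by the full saving.
    """
    if not 0.0 <= passthrough <= 1.0:
        raise ValueError("passthrough must be between 0 and 1")
    return price - passthrough * (old_cost - new_cost)


if __name__ == "__main__":
    # Invented figures: a $10 unit price, cost falling from $6 to $4.
    price, old_cost, new_cost = 10.0, 6.0, 4.0
    print(f"before: price={price:.2f} margin={margin(price, old_cost):.0%}")
    for share in (0.0, 0.5, 1.0):
        new_price = price_after_passthrough(price, old_cost, new_cost, share)
        print(f"passthrough={share:.0%}: price={new_price:.2f} "
              f"margin={margin(new_price, new_cost):.0%}")
```

In this example, even passing the full saving to customers leaves the percentage margin higher than before, because the same absolute profit is earned on a lower price. Actual outcomes depend on pricing decisions that the article does not quantify.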

Risks and unknowns

Several open questions remain about the long-term effect of AWS’s chip business.

- Performance parity. How closely can Amazon match Nvidia across a wide range of models and training tasks?

- Benchmark transparency. Independent, repeatable benchmarks are needed for customers to make informed choices.

- Supply and scaling. Can Amazon produce or source enough silicon and supporting components to grow this business without interruptions?

- Regulatory and antitrust scrutiny. Large tech firms expanding into hardware and services may attract attention from regulators who study competition and market power.

These risks do not negate the achievement of multibillion-dollar revenue, but they frame how sustainable and transformative the move might be.

Historical parallels and context

There are precedents for cloud or platform companies designing their own chips.

- Google created the TPU to accelerate machine learning inside its cloud and services. That effort changed options for training and inference in Google Cloud.

- Apple moved to its own M-series processors for Macs, optimizing power and performance for its software ecosystem.

Those moves show that custom silicon can be effective when paired with strong software integration and clear business reasons for change. AWS’s announcement follows a similar logic, adapted to cloud-scale AI.

Key takeaways

- Andy Jassy said Amazon’s custom AI chip has reached multibillion-dollar revenue, showing early commercial traction.

- AWS builds custom silicon for cost control, differentiation, and performance tuning, especially for inference workloads.

- Nvidia remains a leader for many training tasks, but AWS’s chip adds choice and potential pricing pressure.

- Customer adoption depends on software, libraries, and clear benchmarks that show when the AWS chip makes sense.

- Risks include performance parity, supply scaling, and regulatory attention.

FAQ

Has AWS outcompeted Nvidia?

No. Nvidia continues to lead in many training scenarios. AWS’s chip expands options for certain workloads, and competition will influence pricing and product choices.

Will this make cloud AI cheaper for consumers?

Potentially. If AWS lowers costs and passes savings to customers, some AI services could become more affordable. The full effect depends on pricing choices and competition from other cloud providers.

Can I move my models to AWS’s chip easily?

That depends. Models that fit supported frameworks and use common operations will move more easily. AWS needs strong migration tools and documentation for wide adoption.

Conclusion

Andy Jassy’s announcement that Amazon’s AI chip business has reached multibillion-dollar revenue is a clear signal that custom silicon is more than an experiment at AWS. For cloud customers and the broader AI ecosystem, the news means more choice, potential price competition, and a renewed focus on software and benchmarking. How this changes long-term market share and relative performance will depend on customer adoption, supply scale, and transparent comparisons between architectures.

For ordinary readers, the main takeaway is that major cloud providers are investing in hardware to control costs and offer distinct products. That will shape how AI services are priced and delivered in the years ahead.
