Quick overview

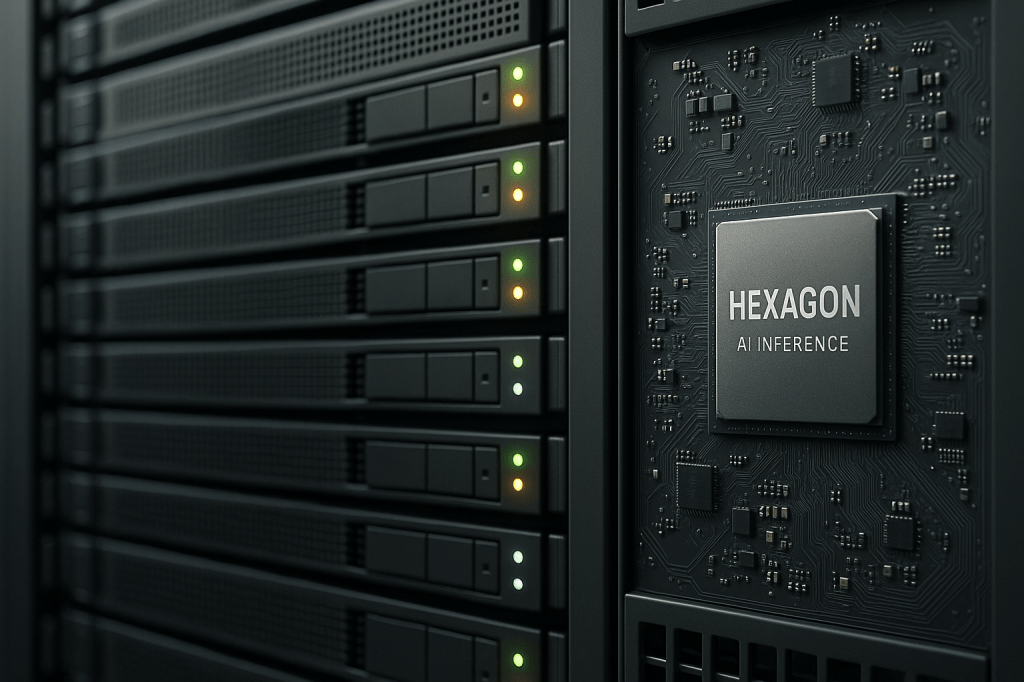

Qualcomm announced two new server-oriented AI chips: the AI200, arriving next year, and the AI250, due in 2027. Both processors reuse the company's Hexagon neural processing technology, which currently powers AI features in Qualcomm phones and laptops. The goal is datacenter-scale inference, with rack deployments of dozens of chips and an emphasis on energy efficiency.

Qualcomm also named an early deployment partner, Humain, which is backed by Saudi Arabia's Public Investment Fund. The first machines based on these chips are intended for inference, not training, and the two initial products, the AI200 and AI250, differ in their memory and power profiles.

What Qualcomm announced and why it matters

At a high level, Qualcomm is reworking the Hexagon neural processing unit (NPU) used in its mobile devices and packaging it for rack-scale servers. The two announced products are the AI200 family, expected next year, and the AI250 family, planned for 2027. Qualcomm says these chips focus on inference, the step that runs trained models to deliver responses in apps and services.

This is significant because inference makes up a large share of AI compute costs in production, across search, chatbots, recommendation systems, and many edge services. Qualcomm aims to compete with GPU makers such as Nvidia and AMD by offering lower power use and different economics for inference-heavy workloads.

Key facts up front

- Products: AI200 (coming next year) and AI250 (2027).

- Foundation: Built on Qualcomm Hexagon neural processing units, adapted from mobile devices.

- Memory and power: the AI200 offers 768 gigabytes of RAM; the AI250 promises much lower power consumption.

- Scale: Designed to be deployed in racks with up to 72 chips acting as a single compute unit.

- Early partner: Humain, backed by Saudi Arabia's Public Investment Fund, has committed to deployment.

Product details and form factors

Qualcomm is packaging multiple Hexagon NPUs into server modules designed for datacenter racks. The architecture supports combining many modules into a larger logical cluster, and Qualcomm describes setups of up to 72 chips in a single rack. That arrangement is meant to compete with GPU-based servers on inference workloads.

The AI200 configuration includes 768 gigabytes of RAM. Qualcomm also highlighted the AI250, which aims for a major improvement in energy efficiency per inference. The focus on memory and power shows the company intends to compete on cost and efficiency at scale.

Hexagon lineage and how these chips differ from GPUs

Hexagon is Qualcomm's long-standing neural processing technology, designed for smartphones and laptops to accelerate on-device AI such as camera features and voice processing. For the AI200 and AI250 families, Qualcomm repurposed and scaled that NPU design for datacenter inference.

There are a few important differences between Hexagon-based inference chips and GPU-based servers:

- Workload focus: these chips are optimized for inference, not for the compute-intensive work of training models.

- Energy and operating cost: Qualcomm emphasizes lower power consumption per inference, which can reduce running costs in production environments.

- Form factor: they are packaged as dense rack-mountable units meant to be combined into larger clusters.
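To make the "power per inference" argument concrete, the sketch below turns an average power draw and a throughput figure into energy per inference and electricity cost per million inferences. All inputs (1,000 W versus 600 W, 500 inferences per second, $0.10 per kWh) and the function names are hypothetical and chosen for illustration; they are not published figures for Qualcomm, Nvidia, or AMD hardware.

```python
# Hypothetical back-of-the-envelope model: none of these inputs are
# published Qualcomm, Nvidia, or AMD figures.

JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def energy_per_inference_joules(power_watts: float, inferences_per_second: float) -> float:
    """Average energy per inference: a watt is one joule per second."""
    if inferences_per_second <= 0:
        raise ValueError("throughput must be positive")
    return power_watts / inferences_per_second


def electricity_cost_per_million(
    power_watts: float, inferences_per_second: float, usd_per_kwh: float
) -> float:
    """Electricity cost (USD) to serve one million inferences."""
    joules = energy_per_inference_joules(power_watts, inferences_per_second) * 1_000_000
    return joules / JOULES_PER_KWH * usd_per_kwh


if __name__ == "__main__":
    # Two hypothetical servers with equal throughput but different power draw.
    for label, watts in [("server A", 1000.0), ("server B", 600.0)]:
        cost = electricity_cost_per_million(watts, 500.0, 0.10)
        print(f"{label}: ${cost:.4f} per million inferences")
```

Because cost scales linearly with power at fixed throughput, a server that draws 40 percent less power for the same work cuts this electricity line item by 40 percent. The model counts only accelerator electricity; real operating costs also include cooling, facilities, and hardware amortization.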

Commercial traction and the Humain partnership

Qualcomm already has a named deployment partner, Humain, an AI infrastructure and services company backed by Saudi Arabia's Public Investment Fund. Its commitment to use Qualcomm's AI200 and AI250 chips signals regional investment in alternative AI hardware beyond the established GPU vendors.

This partnership highlights two trends. First, cloud and regional infrastructure buyers are exploring hardware variety to reduce dependency on single vendors. Second, national investment funds or large sovereign investors can accelerate adoption by providing demand and capital for new server platforms.

Market implications and who might be affected

If Qualcomm's inference chips deliver on their energy and cost promises, they could change the economics of running production AI systems. Companies that run large-scale, low-latency inference, such as content platforms, search services, telcos, and AI API providers, could benefit from lower operating costs.

For existing GPU leaders, competition in inference could pressure margins and prompt more focus on power efficiency. For smaller cloud providers and regional governments, alternative suppliers give more options for building AI infrastructure.

Technical and ecosystem questions to watch

Several practical issues will determine how strongly these chips compete with GPUs and other accelerators.

- Software and model support: success depends on toolchains, model runtime compatibility, and how easily popular models can be ported to Hexagon-based servers.

- Performance benchmarks: independent comparisons against GPU inference on real-world workloads will drive buyer decisions.

- Developer adoption: libraries and frameworks need to be available and well supported before data scientists and engineers will move workloads.

- Supply chain and delivery: scaling from announcement to mass deployment in datacenters requires manufacturing capacity and supply agreements.

Geopolitical context and infrastructure investment

The early partnership with Humain, linked to Saudi Arabia's Public Investment Fund, shows how regional policy and investment can shape AI infrastructure choices. Countries building local AI compute capability may back diverse hardware suppliers for reasons including cost, local control, and resilience.

That dynamic can push global hardware makers to offer options that address local procurement, energy cost, and regulatory preferences. It also means hardware choices may reflect a blend of technical performance and strategic considerations.

Potential downsides and limits

There are reasons to be cautious about assuming immediate disruption. First, the chips are aimed at inference, not training. Training remains a large GPU-driven market, and most model development workflows still rely on GPU clusters.

Second, converting mobile NPU designs to datacenter environments is nontrivial. The software stack, orchestration, and operational tooling will need to be robust to match established vendors. Finally, buyers will want independent performance and efficiency tests before rearchitecting infrastructure.

What this means for ordinary users and businesses

For most consumers the change will be indirect. If Qualcomm's approach reduces datacenter costs for inference, services that rely on real-time AI could become cheaper to operate, which may influence pricing or how quickly new AI features are deployed.

For businesses operating production AI, particularly those with large volumes of inference, the emergence of efficient inference chips could lower running costs and offer procurement alternatives. Telcos and edge providers may find Hexagon-based servers attractive because of power efficiency claims.

Short timeline to watch

- Next year, the AI200 family is expected to reach the market.

- In 2027, Qualcomm plans to introduce the AI250 family.

- Look for initial performance and efficiency benchmarks, software ecosystem updates, and early deployment reports from partners like Humain.

Key takeaways

- Qualcomm announced AI200 and AI250 families built on its Hexagon NPUs, targeting inference in datacenters.

- The AI200 includes 768 gigabytes of RAM, while the AI250 is aimed at much lower power use per inference.

- Qualcomm's design supports rack deployments with up to 72 chips acting as a single unit, offering a compute option distinct from GPU servers.

- Humain, backed by the Saudi Public Investment Fund, is an early deployment partner, which highlights regional infrastructure investment choices.

- Critical challenges remain, including software support, benchmarks against GPUs, and manufacturing scale.

FAQ

Are these chips for training or inference?

They are designed for inference, the step that runs trained models to produce outputs for users and applications. They are not positioned as training accelerators.

Will these chips replace GPUs?

Not immediately. GPUs remain central to model training and many production use cases. Qualcomm's chips aim to be a competitive option for inference workloads where energy efficiency and cost per inference matter.

When will I see services using these chips?

Early deployments should appear after the AI200 launch next year, depending on how quickly partners roll out infrastructure. Wider adoption will depend on benchmarks, software support, and supply scaling.

Conclusion

Qualcomm's announcement shows how established mobile chip designers are approaching datacenter AI. By adapting Hexagon NPUs for rack-scale inference, Qualcomm wants to offer a more power-efficient and cost-effective option for production AI services. The early partnership with Humain suggests that large regional investors want hardware diversity.

The decisive factors will be software support, real-world benchmarks, and whether the energy efficiency claims hold up in production. If those pieces come together, the market for inference hardware could become more varied, which would affect costs and choices for cloud providers, telcos, and enterprises that run AI at scale.
