Overview: What happened and who is involved

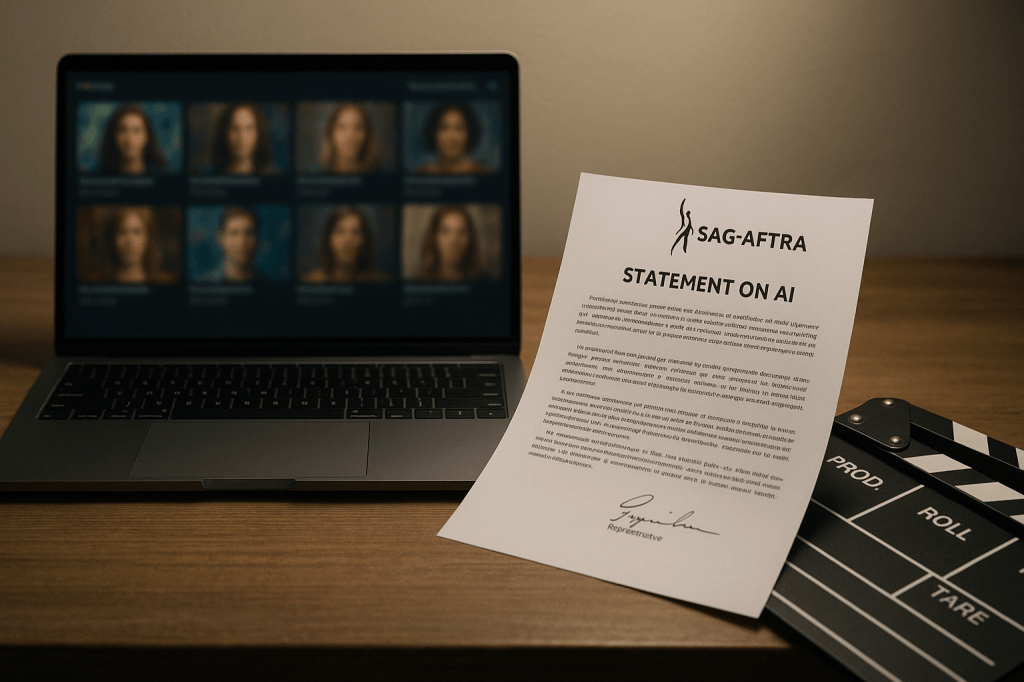

Actor Bryan Cranston, the union SAG-AFTRA, and OpenAI issued a joint statement after AI-generated videos of Cranston appeared using OpenAI’s Sora 2 system. One of the generated clips showed a fictionalized Cranston taking a selfie with an AI recreation of Michael Jackson. The incident triggered public concern from talent agencies and performers about how AI tools can create convincing likenesses and voices without permission.

The statement was issued jointly by Bryan Cranston, OpenAI, SAG-AFTRA, and major talent agencies. OpenAI acknowledged the unintentional generations, apologized, and said it will strengthen guardrails for likeness and voice use. SAG-AFTRA used the moment to stress the need for legal protections, and it pointed to proposed legislation such as the NO FAKES Act, which stands for Nurture Originals, Foster Art, and Keep Entertainment Safe Act.

Why this matters to everyday people

AI systems that generate images and audio are improving quickly. When these tools can produce realistic videos or voice clips of people who did not consent, privacy, reputation, and the reliability of information are all at risk. For actors and public figures, this affects how their image and voice are used. For the public, it affects trust in media and in what we see or hear online.

What OpenAI said and what changed

OpenAI expressed regret for the unintentional generations and committed to stronger protections. The company said it will expedite review of complaints about unauthorized likenesses and will refine opt-in and opt-out controls for people who do not want their likeness or voice used by the model.

The joint statement indicates agreement between the parties that current systems need clearer safeguards, and that OpenAI will act to reduce the chances of further incidents while review processes are improved.

Key elements of the announced changes

- Faster complaint reviews, with priority handling for high-profile or clearly unauthorized material.

- Stronger guardrails and model updates to limit generations that attempt to recreate a known person’s face or voice without permission.

- Plans to improve how opt-in and opt-out controls work, making it easier for performers to register their preferences.

- Ongoing conversations between OpenAI, talent agencies, and SAG-AFTRA about enforcement and monitoring.

Opt-in versus opt-out, simple definitions

Opt-in means a person gives explicit permission before a platform or model can generate content using their likeness or voice. Opt-out means use of a person's likeness or voice is allowed by default unless they take steps to block it. Performers and unions prefer systems where consent is explicit, because it gives people control over how models use their identities.
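The difference between the two models comes down to what happens when a person has registered no preference at all. The short Python sketch below illustrates that default. It is only an illustration under stated assumptions: the names `ConsentModel` and `may_generate` are hypothetical, and nothing here describes how OpenAI or any other platform actually implements its controls.

```python
from enum import Enum


class ConsentModel(Enum):
    """Hypothetical labels for the two consent models described above."""
    OPT_IN = "opt-in"
    OPT_OUT = "opt-out"


def may_generate(person, model, opted_in, opted_out):
    """Return True if generating this person's likeness would be allowed.

    Illustrative only: `opted_in` and `opted_out` are plain sets of names
    standing in for whatever registry a real platform might keep.
    """
    if model is ConsentModel.OPT_IN:
        # Blocked by default; allowed only with explicit permission.
        return person in opted_in
    # Opt-out: allowed by default; blocked only if the person objected.
    return person not in opted_out


# A performer who has never registered any preference.
nobody_registered = set()
print(may_generate("performer_a", ConsentModel.OPT_IN, nobody_registered, nobody_registered))
print(may_generate("performer_a", ConsentModel.OPT_OUT, nobody_registered, nobody_registered))
```

Under opt-in, the unregistered performer is protected without doing anything. Under opt-out, the same performer is exposed until they act, which is why performers and unions favor the first model.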

Industry reaction and why the joint statement is notable

Talent agencies had criticized OpenAI after Sora 2 was released. Their participation in the joint statement signals a partial reconciliation between the tech company and the entertainment industry, at least on the issue of tightening protections.

The agencies and SAG-AFTRA still want statutory protections. The joint statement does not remove that demand, but it does show that OpenAI is taking some immediate steps to address the problem while discussions about broader legal rules continue.

Legal context: NO FAKES Act and calls for law

SAG-AFTRA highlighted proposed legislation such as the NO FAKES Act, short for Nurture Originals, Foster Art, and Keep Entertainment Safe Act. That proposal would create statutory limits and potential liability for creating and distributing deepfakes that misuse a person’s likeness or voice without clear consent.

Even with company-level policy changes, unions and performers are pushing for laws so rights are enforceable in court. The open question is how quickly lawmakers will act, and what specific rules they will set for generative AI tools.

Broader implications for creators, platforms, and the public

This joint statement could set expectations about how major AI companies handle unauthorized likenesses. If one large provider tightens controls, others may follow. That could reduce the frequency of obvious deepfakes, or at least make it easier for affected people to have content removed.

At the same time, technical limits are imperfect. Bad actors may still attempt unauthorized generations. Effective protections will likely require a mix of company policy, monitoring tools, user reporting, and legal rules.

Practical guidance for performers, studios, and creators

- Contracts: Add clear clauses about AI likeness and voice use, specifying whether opt-in or opt-out applies and what approvals are required.

- Registering preferences: Use any platform tools to opt out if you do not want your likeness used, and confirm that your registration was recorded.

- Monitoring: Set up alerts and regular checks for unauthorized uses, including short-form video platforms and social media.

- Complaint workflow: Save evidence and use expedited reporting channels when a platform offers them. Keep records of takedown requests and responses.

- Copyright and publicity rights: Consult legal counsel about both intellectual property and publicity rights in your jurisdiction, since remedies vary by place.

Next steps and open questions

- Enforcement details. How fast will OpenAI remove or block unauthorized content, and what standards will it use to decide? The company promised expedited reviews, but timelines were not fully outlined.

- Transparency on processes. People will want clear, public descriptions of opt-in and opt-out controls, and of how complaint decisions are made.

- Legal developments. Will proposed laws like the NO FAKES Act move forward, and if so, what terms will they set for liability and penalties?

- Industry norms. Will other large AI platforms adopt similar rules, or will practices differ widely across developers?

Key takeaways

- AI-generated videos of Bryan Cranston appeared using OpenAI's Sora 2 system, including a fictional selfie with an AI recreation of Michael Jackson, prompting a public response.

- OpenAI, Bryan Cranston, SAG-AFTRA, and major talent agencies issued a joint statement. OpenAI apologized for unintentional generations and vowed to strengthen protections and speed up complaint reviews.

- Opt-in means explicit permission is required; opt-out means people must take action to block use. Performers and unions favor opt-in systems for better control.

- SAG-AFTRA and agencies are pushing for legal protections such as the NO FAKES Act. Company policy changes may help, but they do not replace law.

- Creators and performers should update contracts, use opt-out tools if available, monitor for misuse, and document complaints carefully.

FAQ

Will OpenAI delete all unauthorized videos of Bryan Cranston?

OpenAI said it will expedite reviews and strengthen protections, but the joint statement did not promise automatic deletion of all past generations. Unauthorized content will be evaluated through a complaint process and removed where policy or law supports removal.

Does this change mean other companies must follow?

No. The statement applies only to OpenAI and the other parties that issued it. However, it creates pressure for other AI providers to adopt similar safeguards. Industry norms often spread when leading companies act, but there is no universal requirement yet.

What should a performer do if their likeness is used without permission?

Use the platform complaint tools, document the instance, notify representation or union staff, and seek legal advice if necessary. If a platform offers expedited review, request that option.

Conclusion

The incident involving Bryan Cranston and OpenAI's Sora 2 prompted a public, joint response from the actor, his union, SAG-AFTRA, major talent agencies, and OpenAI. OpenAI apologized and pledged faster complaint handling and stronger guardrails for likeness and voice use. The event highlights the gap between fast-moving AI capabilities and existing rules for consent and rights.

For performers, creators, and the public, the immediate steps are practical: use platform tools, update contracts, monitor content, and press for clear legal rules. Longer term, lawmakers and industry groups will likely play a major role in defining how AI can and cannot use a person's likeness or voice.
