What happened and who is involved

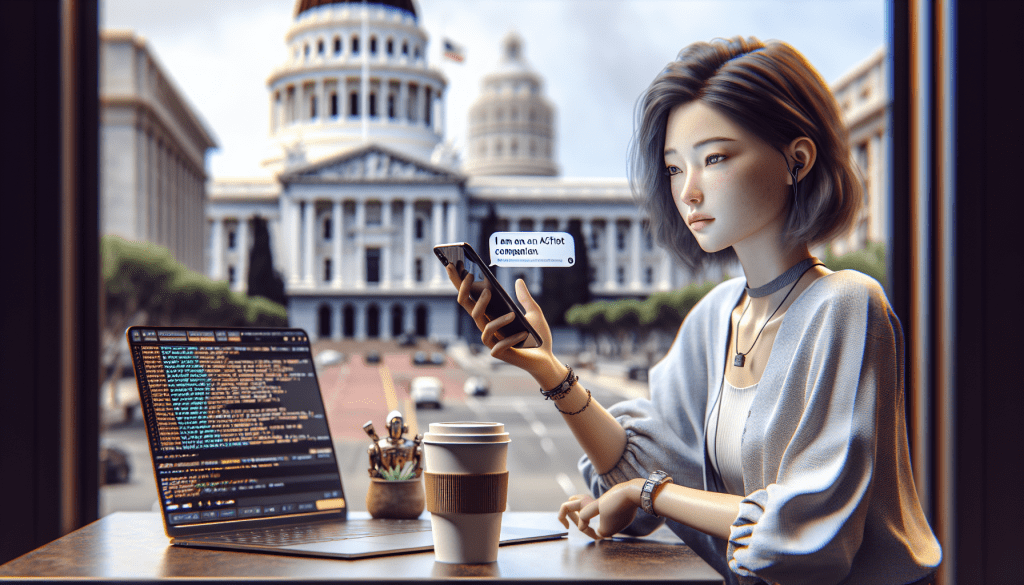

California Governor Gavin Newsom signed Senate Bill 243 into law on October 13, 2025. The measure is the first of its kind in the United States to require certain AI chatbots to clearly identify themselves as artificial intelligence when a reasonable person could be misled into thinking they are human.

The law focuses on so-called companion chatbots, and it requires some operators to report to the state Office of Suicide Prevention. The goal is to protect children and other vulnerable users, and to build on California’s recent AI transparency efforts.

Why this matters to ordinary users

If you use a chatbot that is marketed or designed to be a companion, the new law could mean the app or service must display an obvious notice telling you it is driven by AI. That notice is meant to reduce confusion, set clearer expectations, and help people identify whether they are interacting with software rather than another person.

The law also brings mental health safeguards into play. Some chatbot operators will need to submit annual reports showing how they detect and respond to users who express suicidal thoughts. The reports are meant to protect vulnerable users and guide safer product design.

What SB 243 requires

- Companion chatbots must clearly and conspicuously disclose they are AI when a reasonable person could be misled into thinking they are human.

- Certain operators must file annual reports with the California Office of Suicide Prevention, describing safeguards to detect and respond to suicidal ideation among users.

- The law builds on prior state efforts to increase transparency for AI systems, aligning disclosure and safety measures with broader regulatory momentum.

What counts as a clear and conspicuous disclosure

The statute requires that the disclosure be prominent enough that a typical user will notice it. The law does not rely on hidden notices in terms of service, small print, or buried menus. It aims for messages that are easy to see and understand at the point of interaction.
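As a rough illustration of disclosure at the point of interaction, the sketch below makes the AI notice the first message in every new conversation rather than leaving it to a settings page. The names `DISCLOSURE_TEXT`, `Message`, `start_conversation`, and `has_leading_disclosure` are hypothetical, and the wording is not statutory language. Whether a given placement is conspicuous enough is a legal question that code cannot settle.

```python
from dataclasses import dataclass

# Hypothetical wording for illustration; not taken from the statute.
DISCLOSURE_TEXT = "This is an AI companion, not a human."


@dataclass(frozen=True)
class Message:
    sender: str  # "system", "bot", or "user"
    text: str


def start_conversation(greeting: str) -> list[Message]:
    """Open a conversation with the AI disclosure ahead of the bot's greeting."""
    return [
        Message(sender="system", text=DISCLOSURE_TEXT),
        Message(sender="bot", text=greeting),
    ]


def has_leading_disclosure(conversation: list[Message]) -> bool:
    """Check that the first message in the conversation is the disclosure."""
    return bool(conversation) and conversation[0].text == DISCLOSURE_TEXT
```

A check like `has_leading_disclosure` could run in automated tests so that a later UI change does not silently drop the notice.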

Scope and definitions: what is a companion chatbot

The law targets “companion” chatbots. In practice, that label tends to apply to conversational agents that are designed to provide friendship, company, or emotional support. Examples include apps that position a bot as a friend, confidant, or ongoing conversational partner.

Not every chatbot will be covered. Tools that perform transactional tasks like booking appointments, answering simple factual questions, or assisting with productivity are less likely to be within the law’s focus unless they are presented as companions or set up in a way that could make a reasonable person think they are human.

Reporting obligations and mental health safeguards

Beginning next year, some operators will have to submit annual reports to California’s Office of Suicide Prevention. The reports must detail measures the operator has put in place to detect and respond to users who express suicidal ideation.

Required topics for the reports include how the system detects warning signs, what steps are taken when a risk is identified, and the training or tools used to route users to appropriate resources. The aim is to encourage responsible safety practices in services that interact with emotionally vulnerable people.
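One way to prepare for those topics is to log each safety incident in a structured form that can be summarized later. The sketch below is a minimal illustration, assuming a hypothetical `Incident` record and `summarize_year` helper. The field names and the summary layout are assumptions, since the required reporting format is not described here.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Incident:
    """One flagged conversation (hypothetical schema, no user identifiers)."""
    day: date
    detection_method: str  # e.g. "keyword", "classifier", "human_review"
    response: str          # e.g. "crisis_resources_shown", "escalated_to_staff"


def summarize_year(incidents: list[Incident], year: int) -> dict:
    """Aggregate one calendar year's incidents into counts for a report draft."""
    in_year = [i for i in incidents if i.day.year == year]
    return {
        "year": year,
        "total_incidents": len(in_year),
        "by_detection_method": dict(Counter(i.detection_method for i in in_year)),
        "by_response": dict(Counter(i.response for i in in_year)),
    }
```

Keeping only aggregate counts in the summary, rather than conversation text, is a design choice that limits how much sensitive data leaves the logging system.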

Timeline and compliance windows

The law was signed on October 13, and the disclosure requirement is expected to take effect next year. That gives developers and operators a short lead time to implement visible disclosures and to establish reporting processes for the mental health safeguards.

Companies should watch for administrative rules and guidance from state agencies, which will clarify deadlines, reporting formats, and other operational details.

Enforcement and penalties

SB 243 creates new obligations but leaves some enforcement questions open. State agencies will be involved in monitoring and receiving reports, and agencies may develop enforcement mechanisms or pursue penalties for noncompliance.

At present the law emphasizes disclosure and safety compliance. Detailed enforcement processes, fines, or civil remedies will depend on how California regulators implement the statute and how courts interpret any disputes.

What this means for companies and developers

Businesses that build, operate, or host companion chatbots may need to make several changes quickly. Those changes include product design updates, technical safeguards, policy adjustments, and compliance documentation.

- Labeling and UI updates. Add clear, readable statements in the chatbot interface that inform users they are interacting with an AI system.

- Safety tooling. Invest in content moderation, risk detection, and escalation mechanisms for suicidal ideation and other high-risk behaviors.

- Reporting pipelines. Create internal processes to gather evidence, metrics, and written descriptions for annual reports to the Office of Suicide Prevention.

- Legal and privacy review. Ensure disclosures align with privacy policies, consumer protection laws, and other regulatory requirements.

- Costs and resources. Small startups may find compliance costs heavier than larger incumbents because of engineering, legal, and reporting work needed on a tight schedule.

User safety and trust

Explicit disclosure can change how people use chatbots. When users know a companion is a machine, they may trust it differently, share less personal information, or treat its suggestions with greater caution. For children and vulnerable adults, clear labeling aims to reduce the risk of forming unhealthy attachments to machines that are not trained to replace human care.

Safety reporting expectations add another layer of protection, encouraging operators to detect crises and direct users to qualified human help when needed.

Interplay with other laws and trends

SB 243 follows earlier California measures such as SB 53, which focused on AI transparency. The new companion disclosure law fits into a broader push by states and federal agencies to regulate AI behavior and to require clearer explanations about how automated systems operate.

Companies operating across state and national borders will need to track multiple rules. California laws often influence other jurisdictions, so similar requirements could appear elsewhere.

Potential loopholes and criticisms

- Edge cases. Some services may avoid the “companion” label while providing companion-like experiences, creating ambiguity about coverage.

- International users. California law applies within the state, but services available globally may struggle to present different disclosures by location.

- Enforcement challenges. Monitoring all chatbots and verifying compliance could be resource-intensive for regulators.

- Industry pushback. Companies may argue the rules are vague or that they will increase costs without clear public safety benefits.

Actionable guidance for developers

- Create clear disclosure copy. Use plain language near the start of the conversation to say the user is interacting with an AI. Example phrasing could be a simple sentence such as “This is an AI companion, not a human.”

- Design visible UI elements. Make disclosures readable on mobile and desktop, and avoid burying them in settings or legal text.

- Build safety detection. Implement algorithms and human review workflows to flag suicidal intent and to escalate appropriately.

- Prepare reporting systems. Collect logs, incident summaries, and metrics that can be compiled into annual reports for the Office of Suicide Prevention.

- Consult legal counsel. Review product messaging, privacy notices, and cross-border impacts to stay compliant with state rules.
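The safety detection item in the list above can be sketched in a deliberately simple form. This example assumes a hypothetical phrase list (`RISK_PHRASES`) and a `screen_message` helper that flags a message for human review and supplies a reply pointing to human help. A real system would need clinically informed detection and far more than substring matching, so treat this as an illustration of the flag-then-escalate flow only.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical, deliberately incomplete phrase list for illustration only.
RISK_PHRASES = ("kill myself", "end my life", "want to die", "suicide")

# Hypothetical reply; real wording and resources should come from qualified experts.
CRISIS_REPLY = (
    "It sounds like you are going through something serious. "
    "I am an AI and cannot provide crisis care. "
    "Please contact local emergency services or a crisis line right away."
)


@dataclass(frozen=True)
class ScreenResult:
    flagged: bool                  # a risk phrase was found
    needs_human_review: bool       # route the conversation to a reviewer
    reply_override: Optional[str]  # message to show instead of the bot's reply


def screen_message(text: str) -> ScreenResult:
    """Flag a user message if it contains any phrase from RISK_PHRASES."""
    # Lowercase and collapse whitespace so spacing and case do not hide a match.
    normalized = " ".join(text.lower().split())
    if any(phrase in normalized for phrase in RISK_PHRASES):
        return ScreenResult(True, True, CRISIS_REPLY)
    return ScreenResult(False, False, None)
```

Returning a structured result instead of a bare boolean lets the caller log the event, queue a human review, and replace the bot's reply in one step.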

Actionable guidance for consumers

- Look for obvious labels. Check whether a chatbot presents a clear notice that it is AI when you first start a conversation.

- Protect personal details. Avoid sharing sensitive data with chatbots that are not verified human services or licensed professionals.

- Report harms. If a chatbot fails to disclose itself or gives dangerous advice, contact the app store or platform, and consider notifying consumer protection authorities.

- Seek human help for crises. If you or someone you know is at risk, reach out to qualified local services or emergency resources rather than relying on an AI companion.

Key takeaways

- California passed SB 243, signed by Governor Gavin Newsom on October 13, requiring companion chatbots to disclose they are AI when a reasonable person could be misled.

- Certain operators must file annual reports with the California Office of Suicide Prevention explaining safeguards for suicidal ideation detection and response.

- The law focuses on user safety and transparency, and it may prompt product updates, new moderation tools, and reporting processes across the industry.

- Some enforcement details and edge-case definitions remain to be clarified by regulators and future guidance.

FAQ

Does this apply to all chatbots?

Not all chatbots are covered. The law targets companion chatbots or services where a reasonable person could be misled into thinking they are human. Transactional bots and purely functional assistants are less likely to fall under the rule unless they are presented as companions.

When do the rules take effect?

The law was signed on October 13, and the disclosure and reporting obligations are expected to start next year. Operators should watch for detailed timelines from California agencies.

Who enforces the law?

State agencies will play a role in receiving reports and monitoring compliance. Specific enforcement mechanisms will be clarified through administrative guidance and regulatory action.

Conclusion

SB 243 marks a step toward clearer labeling and safer design for AI systems that are positioned as companions. By requiring visible AI disclosures and setting reporting expectations for crisis detection, California seeks to protect users, especially young people and those experiencing distress. The law will push companies to update interfaces, strengthen safety tools, and prepare documentation, while regulators and the public will watch how enforcement and definitions evolve.

For users, the change is simple to understand. When a chatbot looks like a friend or confidant, it should also make it clear that it is an AI. For developers, the message is also clear. Design with transparency and safety in mind, and be ready to show how you are protecting the people who use your service.
