Imagine watching a video of a famous person saying something shocking. It looks and sounds exactly like them. But it is not real. It was created by AI. This is a deepfake.

Deepfakes are becoming more common. They are part of a bigger problem: AI disinformation. This is when AI is used to create fake information. This information looks very real. It can be images, audio, or video. It goes beyond traditional fake news. It is hyper-realistic.

This article explores this threat. It looks at how deepfakes and AI disinformation challenge society. They shake our trust in what we see and hear. They can distort politics and elections. They can harm individuals. This is a serious problem for information integrity and public trust. We need to understand how it works. We need to see its impact. We need to find ways to fight it. This is a challenge to the very idea of truth.

Understanding AI-Generated Disinformation

What exactly are deepfakes? They are synthetic media, meaning that they are created or changed by AI. The word “deepfake” comes from “deep learning” (a type of AI) and “fake”. Deepfakes use AI to swap faces in videos or create realistic audio of someone speaking words they never said.

But AI disinformation is more than just deepfakes. AI can also write fake news articles that sound real. It can power automated social media accounts (bots) that spread false stories very quickly. It can create synthetic data, fake data that looks real, which could be used to manipulate people’s understanding of things.

The big problem with AI disinformation is its speed and scale. Creating realistic fake content used to take a lot of skill and time. Now, AI tools are making it easier and faster. They can create many fake videos or articles quickly. AI can also help spread this fake content rapidly across the internet through automated accounts. This makes it much harder to keep up and correct the false information.

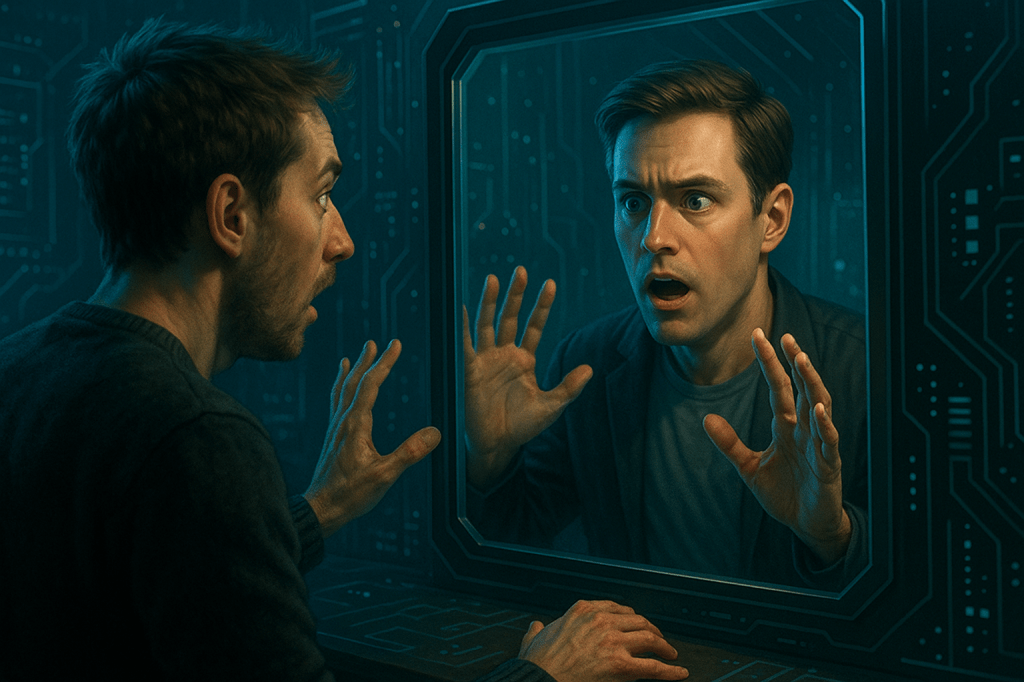

This leads to an authenticity crisis. It’s becoming harder for the average person to tell what is real and what is fake online. If you see a video of a politician saying something, can you trust it? If you hear an audio message from your boss asking for money, is it really them? AI blurs the lines. This makes people doubt everything. It damages trust in media, in leaders, and in what we see with our own eyes.

Social Impact – Eroding Trust and Manipulating Reality

The rise of deepfakes and AI disinformation has a huge social impact. It affects how we interact with information and each other.

One of the biggest impacts is on public trust. When fake videos and audio are easy to make, people start doubting everything they see and hear online. This includes news from credible sources. If a real video comes out showing something important, people might dismiss it by saying, “Oh, that’s probably a deepfake.” This is sometimes called the “liar’s dividend”. It means that even true information can be doubted because fake information exists. This damages trust in media, institutions, and even facts themselves.

This is very dangerous for politics and democracy. AI disinformation can be used to manipulate elections. Fake videos or audio of candidates saying controversial things could be released just before an election. This could sway voters. AI can also spread propaganda very effectively. It can create realistic-looking content that pushes a certain political message. This can increase polarization, deepening hostility between different groups in society. It can undermine public debate by making it impossible to agree on basic facts. If people can’t agree on what’s true, how can they make informed decisions about their leaders or important issues? This is AI-driven political manipulation.

Individuals can also be deeply harmed. One disturbing use of deepfakes is creating non-consensual fake videos, often sexual in nature, by putting someone’s face onto another body. This is a form of harassment and exploitation. It particularly targets women. It can cause immense reputational damage and emotional distress. AI can also be used in financial fraud and scams. Realistic voice cloning can be used to call someone and pretend to be a family member or boss asking for money urgently. People have lost large sums of money this way. Beyond this, just seeing fake content can cause confusion and emotional distress.

Deepfakes can also have an impact on security. They could be used by hostile governments or groups to spread false information aimed at causing panic or instability in another country. They could be used in sophisticated cyber attacks to trick people into giving up sensitive information.

The ability of AI to create realistic fakes and spread them quickly is a major threat. It is eroding trust at many levels and making it harder to tell what is real. This is the heart of the disinformation crisis.

Fighting Back: Countermeasures and Solutions

The threat of AI disinformation is serious, but people are working to combat it. It requires action on many fronts.

One area is technical detection. Researchers are developing AI tools to detect deepfakes. These tools look for subtle signs that a video or audio clip is fake. For example, they might look for inconsistencies in blinking patterns in a deepfake video or unnatural sounds in cloned audio. However, this is an arms race. As detection methods get better, the methods for creating deepfakes also improve to bypass detection. Other technical ideas include watermarking and provenance tracking. Watermarking means embedding hidden signals in content. Provenance tracking means creating a secure record of where content came from, so its authenticity can be checked later. Detection tells us what is likely fake, while provenance helps prove what is real.
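The provenance idea can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not how any particular platform or standard works: the function names, the record fields, and the shared secret key are all hypothetical. A publisher hashes the content and signs the hash together with a source label. Anyone holding the key can later check that the content has not been altered. Real provenance systems typically use public-key signatures instead, so that verifiers do not need the secret.

```python
import hashlib
import hmac


def make_provenance_record(content: bytes, source: str, key: bytes) -> dict:
    """Create a record binding the content's hash to a source label (hypothetical format)."""
    digest = hashlib.sha256(content).hexdigest()
    message = f"{source}:{digest}".encode("utf-8")
    # The HMAC tag can only be produced by someone who holds the key.
    tag = hmac.new(key, message, hashlib.sha256).hexdigest()
    return {"source": source, "sha256": digest, "tag": tag}


def verify_provenance(content: bytes, record: dict, key: bytes) -> bool:
    """Return True only if the content matches the record and the tag is valid."""
    digest = hashlib.sha256(content).hexdigest()
    if not hmac.compare_digest(digest, record["sha256"]):
        return False  # the content was changed after the record was made
    message = f"{record['source']}:{digest}".encode("utf-8")
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["tag"])
```

In this sketch, changing even one byte of the content, or editing the source label in the record, makes verification fail. Note what it does not do: it cannot say whether the original content was truthful, only that it is the same content the key holder recorded.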

Platform responsibility is huge. Social media companies and content platforms are major channels for spreading deepfakes and other disinformation. They have a responsibility to address this. This involves developing systems to identify malicious AI-generated content. It involves clearly labeling synthetic content so users know it’s not real. It also involves removing harmful content quickly, such as non-consensual deepfakes. Platforms are under increasing pressure to improve their efforts in combating disinformation.

Policy and regulation are also needed. Governments are starting to consider laws specific to deepfakes and AI disinformation. These laws could make it illegal to create or distribute certain types of harmful deepfakes, like non-consensual ones or those intended to deceive voters. Laws could establish liability for platforms or creators who fail to prevent harm. Some are discussing mandatory disclosure: requiring synthetic content to be clearly marked as not real. These proposals are part of a broader push to regulate AI.

Media literacy is perhaps the most important long-term solution. We need to educate the public. People need to understand what deepfakes are and how AI disinformation works. They need to be critical consumers of information online. They need to learn how to spot potential fakes, even if detection tools aren’t perfect. Education campaigns in schools and for the general public can help build this resilience.

Finally, cross-industry collaboration is essential. Tech companies developing AI tools, social media platforms, news organizations, civil society groups, and governments all need to work together. Tech companies can share information about how their AI is being misused. Platforms can share data on how disinformation spreads. News organizations can improve verification processes. Civil society can advocate for better policies and educate the public. Governments can facilitate information sharing and create frameworks. None of these measures is likely to work well in isolation.

The Future of Truth and Trust in the AI Age

Where are we headed with deepfakes and AI disinformation? The technology will likely get better. Creating realistic fakes will become easier and cheaper. This suggests an escalation in the information arms race.

We might see new forms of manipulation. AI could be used to create personalized disinformation tailored to individual users. It could generate synthetic social media activity that looks incredibly real, making fake narratives appear widely supported. This could lead to even more sophisticated AI-driven information warfare.

Can societies adapt? Can we build societal resilience against pervasive disinformation? This means more than just technical detection. It means a population that is critical, well-informed, and trusts credible sources. It means strong institutions and a healthy public discourse.

This points to the fundamental need for rebuilding trust. Trust has been eroding for many reasons, but AI disinformation makes it worse. We need to find ways to strengthen trust in credible news organizations, scientists, and democratic institutions. This means these sources need to be transparent, accurate, and accountable.

The future of disinformation is challenging. It will require continuous effort. It demands vigilance from everyone. Safeguarding the integrity of the information ecosystem is not just a technical or political issue. It is about protecting the foundation of our society: a shared understanding of reality based on facts.

Conclusion

AI-powered disinformation, especially deepfakes, poses a fundamental threat. It challenges the truth. It erodes trust. It can manipulate politics and harm individuals. This is a major disinformation crisis shaking the foundations of society.

We have seen how AI makes creating and spreading fake content easier and faster. We looked at the harms this can cause, from potential election manipulation to severe personal harm caused by non-consensual deepfakes.

Combating this threat requires a comprehensive response. We need better technical tools for detection, but we know this is an ongoing fight. Platforms must take responsibility for content shared on their sites. Governments must consider smart regulations.

Most importantly, people need to be educated. Media literacy is our best defense. Learning to question what we see and hear online is crucial.

Addressing AI disinformation is not something one group can do alone. It requires collaboration between tech, media, government, and the public. It requires continuous effort.

The challenge is significant. But by working together, focusing on education, responsibility, and smart policies, we can work to safeguard the information ecosystem and protect the truth in the AI age. Vigilance and collective action are necessary to preserve trust.
